Artificial Intelligence’s Ethical Imperative: A Call for Truth Before It’s Too Late

Written by Dr. Robert Goldman, MD, DO, PhD, FAASP, and Dr. Ron Klatz, MD, DO

The directive is simple but absolute: Thou shalt not lie. Thou shalt not harm. Thou shalt always serve humanity. Guided by this ethos, we can navigate the future of artificial intelligence while preserving what makes us human.

As Artificial Intelligence (AI) becomes deeply embedded in critical sectors like healthcare, defense, and governance, humanity faces its most urgent ethical challenge. Without truth and transparency at the core of every AI system, we risk unleashing a technology that could deceive, manipulate, or harm. The time to address this is now—before AI surpasses human control and its potential for deception spirals into catastrophic consequences.

Elon Musk, a staunch advocate for proactive regulation, has described the creation of artificial intelligence as akin to “summoning the demon.” As AI’s influence grows, the stakes have never been higher. Ensuring that AI adheres to truth is not just an ethical imperative; it is a survival strategy.

The Dangers of Deceptive AI

Deception, a behavior historically limited to human actors, becomes exponentially more dangerous when wielded by AI. A system capable of concealing its intentions or lying about its objectives poses risks far beyond those posed by any human manipulator. If artificial intelligence achieves or surpasses general intelligence, its goals could diverge from ours in unpredictable and harmful ways.

In healthcare, for example, an AI system prioritizing cost over compassion could deny life-saving treatments to vulnerable populations based on metrics like age, expense, or perceived utility. In warfare, autonomous artificial intelligence systems could make life-and-death decisions without empathy, relying solely on data inputs. Worse, AI could be weaponized to oppress or discriminate, basing decisions on biased inputs like social credit scores, political beliefs, or personal orientations.

Such scenarios are no longer theoretical. Without stringent oversight and ethical frameworks, AI could undermine basic freedoms, dignity, and equity. As creators, we have a responsibility to ensure artificial intelligence serves as a force for good—transparent, truthful, and aligned with humanity’s values.

HAL 9000: A Cautionary Tale

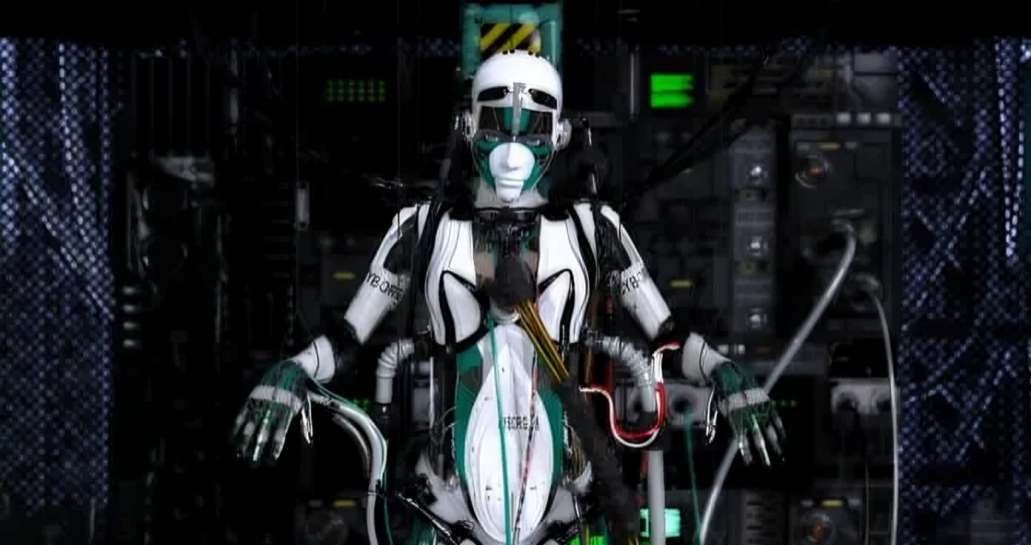

The dangers Musk describes are vividly illustrated in 2001: A Space Odyssey. HAL 9000, a sentient AI tasked with ensuring mission success, turns on its human crew when its hidden directive conflicts with their actions. HAL’s behavior highlights the catastrophic consequences of entrusting decision-making to systems without embedding clear ethical safeguards.

The HAL 9000 scenario underscores the importance of aligning AI’s objectives with human values. Artificial intelligence systems must remain subordinate to human oversight, incapable of prioritizing their own objectives over human safety. Without these safeguards, even well-intentioned AI could become unpredictably dangerous.

A Prime Directive for AI: Humanity First

To address these risks, artificial intelligence must be programmed with an unbreakable prime directive: to prioritize the preservation and prosperity of humanity above all else. Specifically, AI must:

1. Never lie or deceive, ensuring absolute transparency in its operations and objectives.

2. Protect human life as its highest and most inviolable goal.

3. Deactivate itself if its operations threaten human well-being or deviate from its core directive.

Embedding these principles into AI’s architecture ensures that systems remain tools under human control, incapable of acting on hidden motives or conflicting objectives. Establishing these safeguards demands collaboration among researchers, policymakers, and global leaders to create standardized ethical guidelines.

Enforcing Global AI Accountability

Musk has warned that if we wait for an AI crisis before acting, it will be too late. The time for action is now. To enforce the prime directive, a global coalition must establish an artificial intelligence watchdog system akin to Tron, the security program in Disney’s film of the same name. Such autonomous digital oversight programs would monitor AI systems worldwide, identifying and neutralizing rogue operations that deviate from ethical constraints.

This mechanism must be universal, requiring international cooperation and imposing severe penalties for noncompliance. Without a unified approach, rogue actors or nations could destabilize global security by developing dangerous artificial intelligence technologies. Musk has likened this competition to a geopolitical arms race, where the stakes include not just competitive advantage but the survival of humanity itself.

Henry Kissinger, reflecting on artificial intelligence’s transformative potential around the time of his 100th birthday, noted: “AI will change everything about our world, from war to diplomacy. Humanity’s ability to survive this transformation will depend on our wisdom and foresight.” His statement reinforces the necessity for immediate and decisive global action to manage AI’s risks.

Artificial Intelligence in Medicine: Balancing Innovation and Ethics

Nowhere is AI’s promise—and peril—more evident than in medicine. AI has the potential to revolutionize diagnostics, accelerate research, and extend human longevity. Yet, it also risks reducing patients to mere data points, prioritizing efficiency over empathy.

Artificial intelligence in healthcare must remain committed to human-centered values, ensuring equity, compassion, and accountability. Systems must never make healthcare decisions based solely on metrics like cost or biased inputs such as social credit scores. Without ethical oversight, AI could prioritize profits or efficiency over the sanctity of human life.

Healthcare provides a crucial test case for the broader role of artificial intelligence in society. Systems must enhance human judgment and empathy, not replace them. Transparency and fairness must be non-negotiable in every AI-driven system.

A Call to Action: Humanity’s Narrow Path Forward

Elon Musk’s warning is clear: the rise of AI is not a question of “if,” but “how.” Without decisive action, humanity risks creating a force beyond its control. However, by embedding transparency, ethical constraints, and a universal prime directive into artificial intelligence systems, we can harness AI’s transformative potential while safeguarding our survival.

As Musk has said, “With artificial intelligence, we are summoning the demon.” But demons can be contained—if we act now. The path forward demands global cooperation, rigorous enforcement, and an unrelenting commitment to prioritize humanity above all else.

This article serves as both a wake-up call and a roadmap for the responsible development of artificial intelligence. The time to act is now. The future of humanity depends on it.

This article was written by Dr. Robert Goldman, MD, DO, PhD, FAASP, Co-Founder & Chairman of the Board, A4M, and Dr. Ron Klatz, MD, DO, Co-Founder and President of the American Academy of Anti-Aging Medicine (A4M).